
I am a first-year PhD student in Computer Science and Engineering at the University of California, Santa Cruz, advised by Yang Liu and Jeffrey Flanigan. I have had the privilege of being advised by Jeffrey Flanigan throughout my undergraduate and master's studies. I currently work on machine unlearning and alignment. Previously, I worked with Jeffrey Flanigan on fundamental problems in modern deep learning, including double descent, data scaling laws, and structural risk minimization. Before beginning my PhD, I earned both my bachelor's and master's degrees in Computer Science and Engineering at UC Santa Cruz.

CV / CV of Failure / Email / Google Scholar / Github / Blog / LinkedIn


Previous Events


These include publications and preprints.


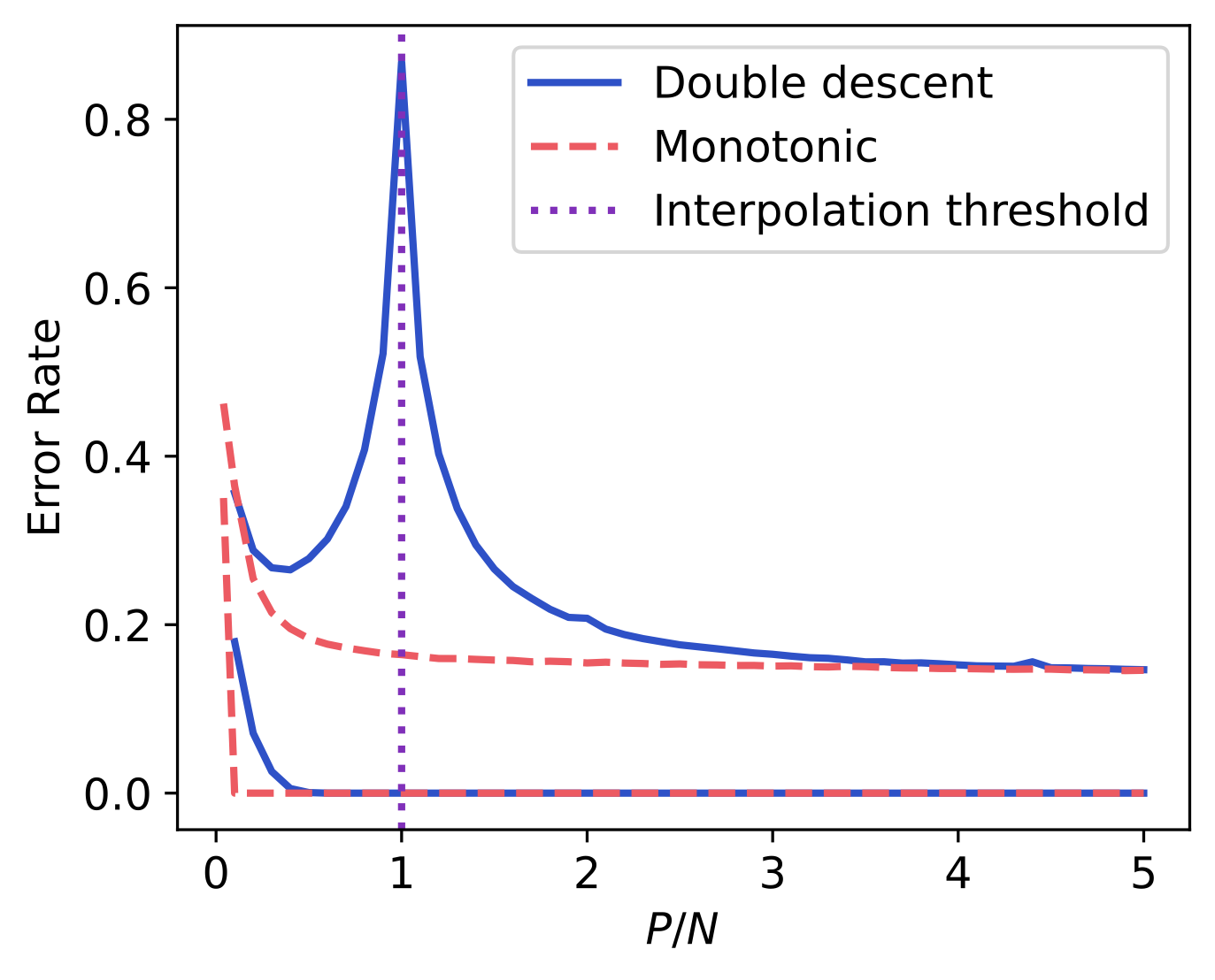

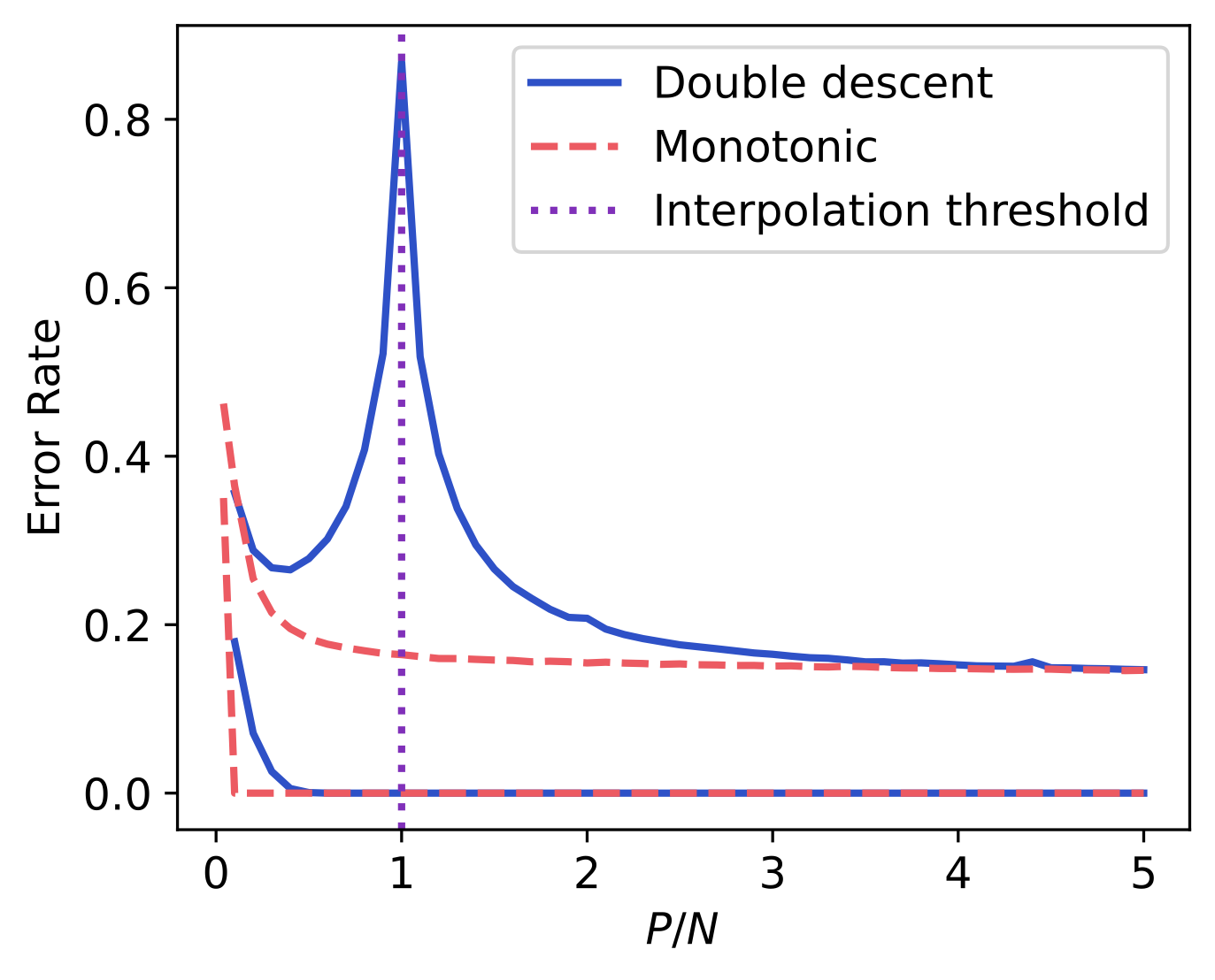

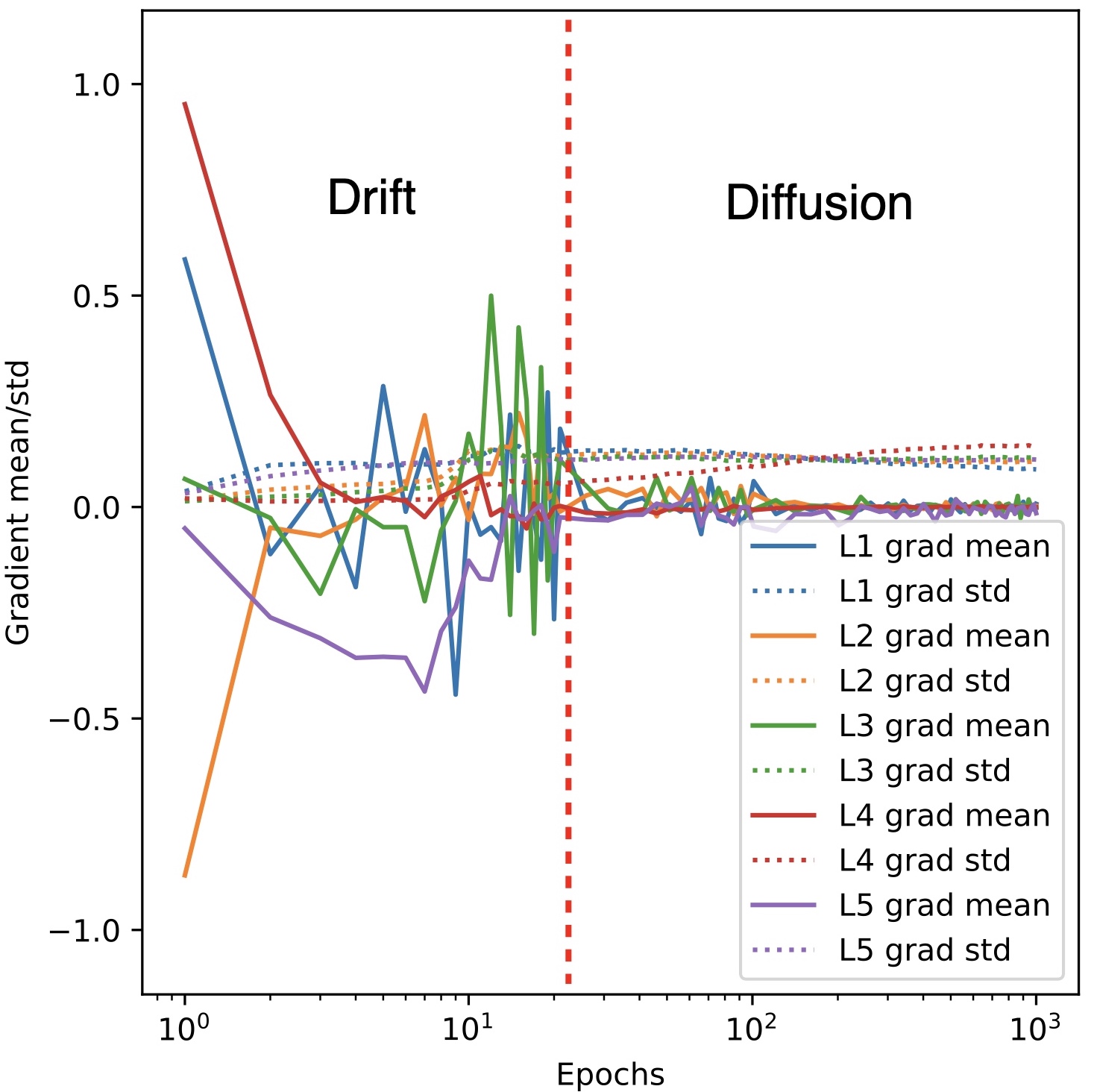

We demonstrate that, from the viewpoint of optimization, the effects of these disparate factors unify into a single phenomenon: double descent is observed if and only if the given optimizer can find a sufficiently low-loss minimum.


Overfitted models do not exhibit the double descent phenomenon due to (1) weak optimizers that struggle to reach a low-loss local minimum and (2) the presence of an exponential tail in the shape of the loss function.


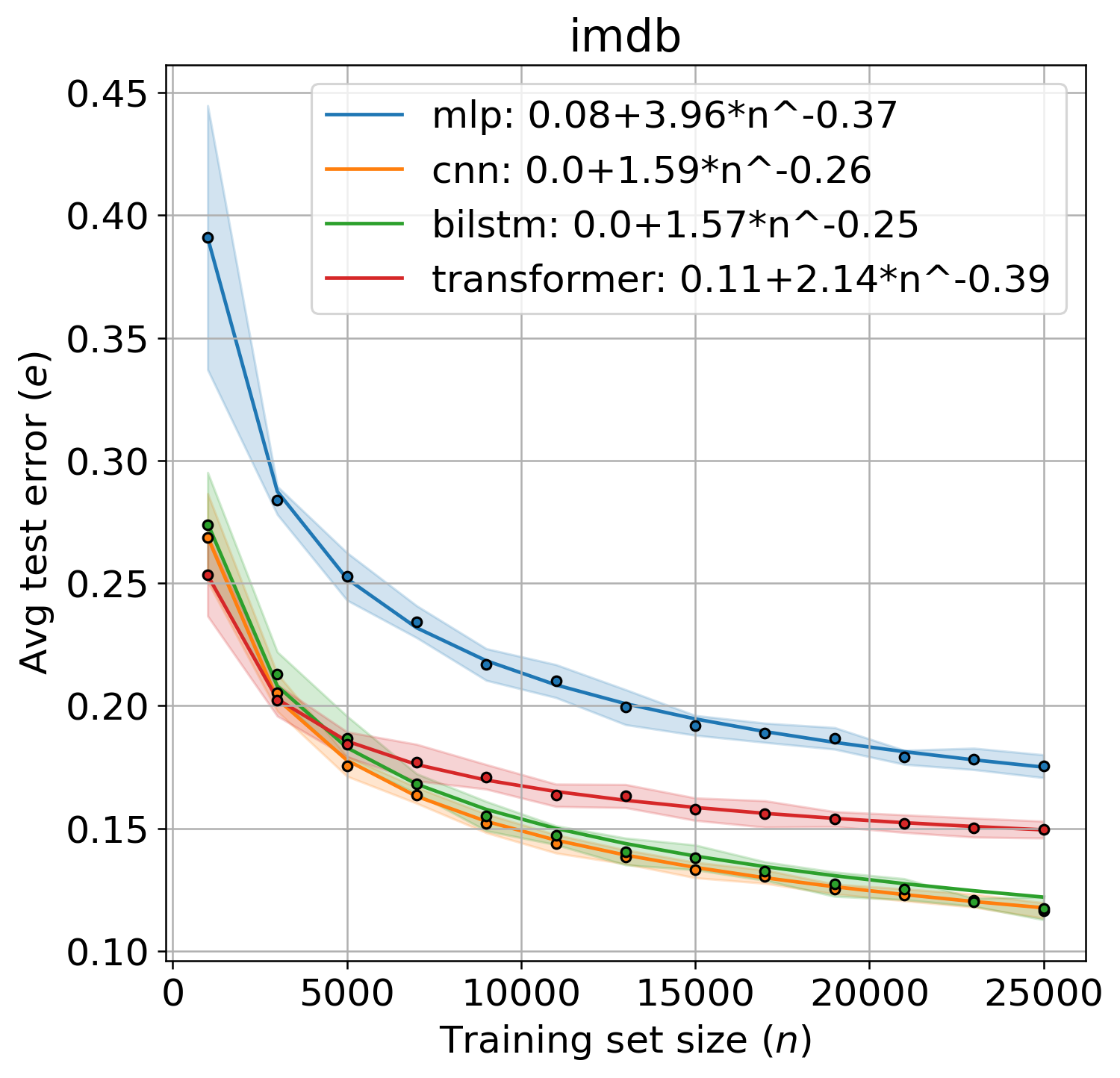

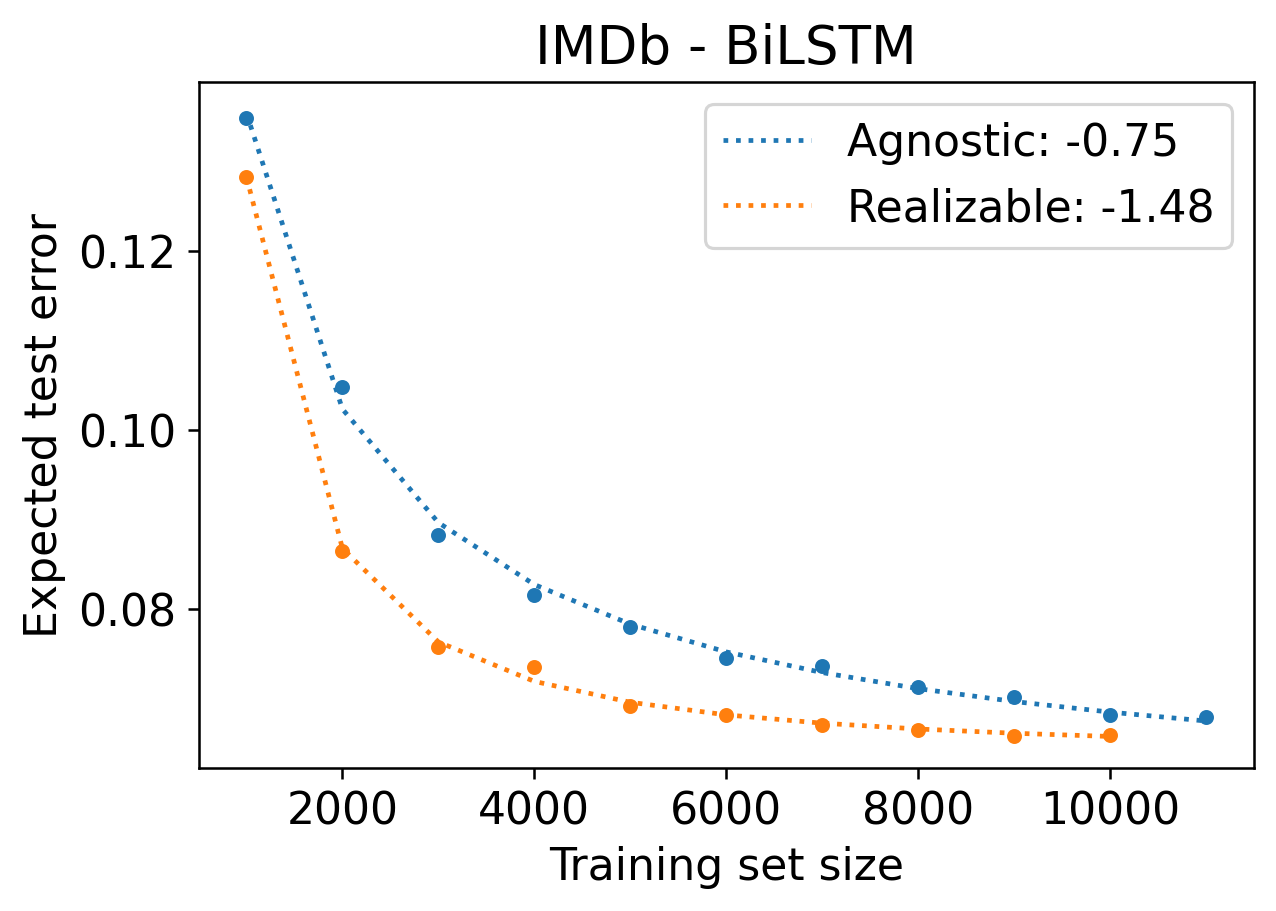

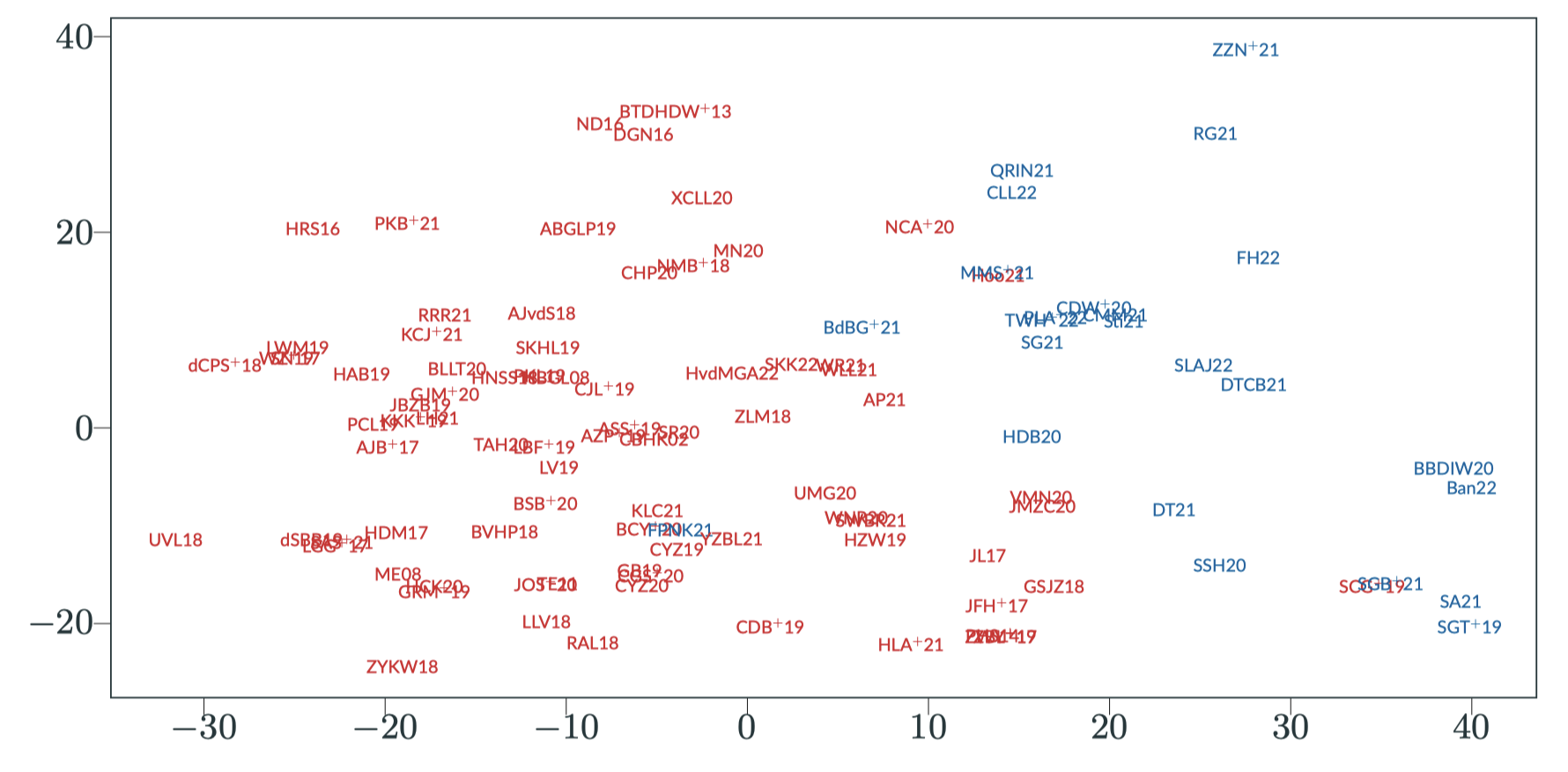

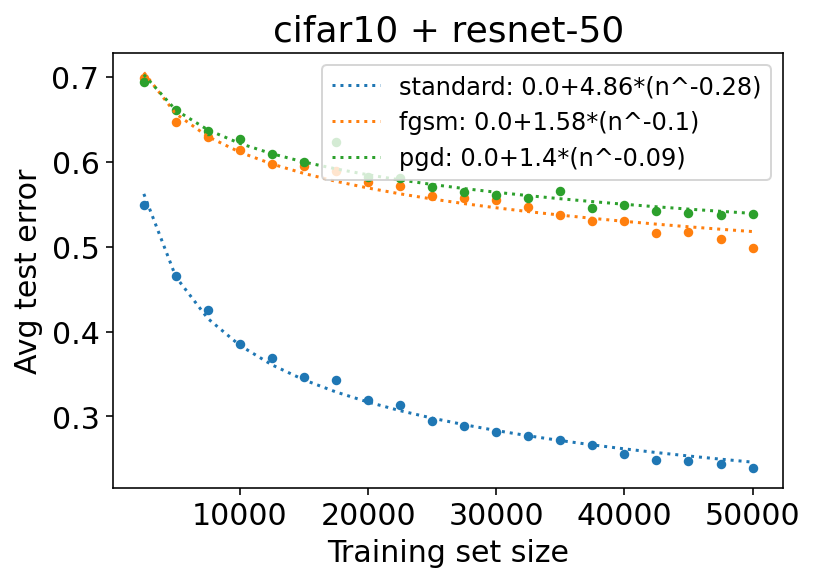

We empirically estimate the power-law exponents of various model architectures and study how they are altered by a wide range of training conditions for classification.


We present a dataset filtering approach that uses sets of classifiers, similar to ensembling, to identify likely noisy (or non-realizable) examples and exclude them, so that a faster sample complexity rate is achievable in practice.


These include coursework and side projects.


We study the relationship between the degree of mutual information compression and generalization in the context of the double descent phenomenon.


We study the problem of learning to construct compact representations of neural network weight matrices by projecting them into a smaller space.


We surveyed recent work on dataset bias and bias in machine learning.


We show that adversarial training (with the Fast Gradient Sign Method and Projected Gradient Descent) reduces the empirical sample complexity rate for an MLP and a variety of CNN architectures on MNIST and CIFAR-10.


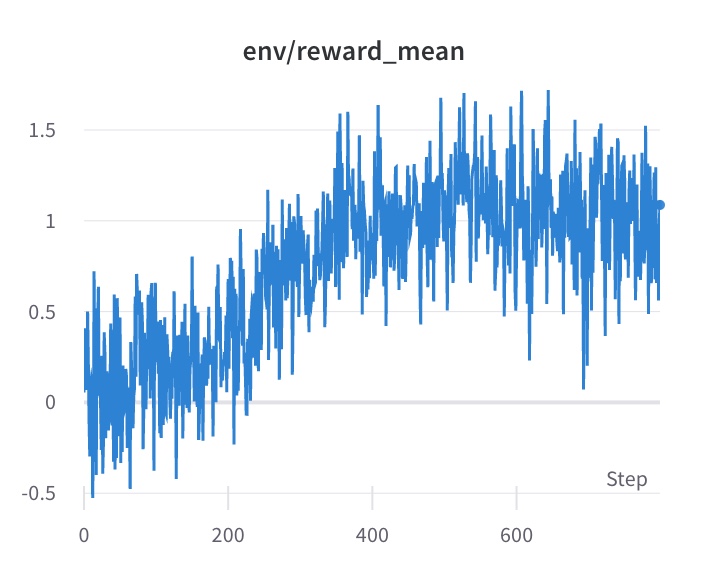

Trained a distilled RoBERTa model as a text classifier together with a GPT-2 text generator, optimizing the generator with proximal policy optimization in tandem with the classifier to produce augmented text for text classification tasks.


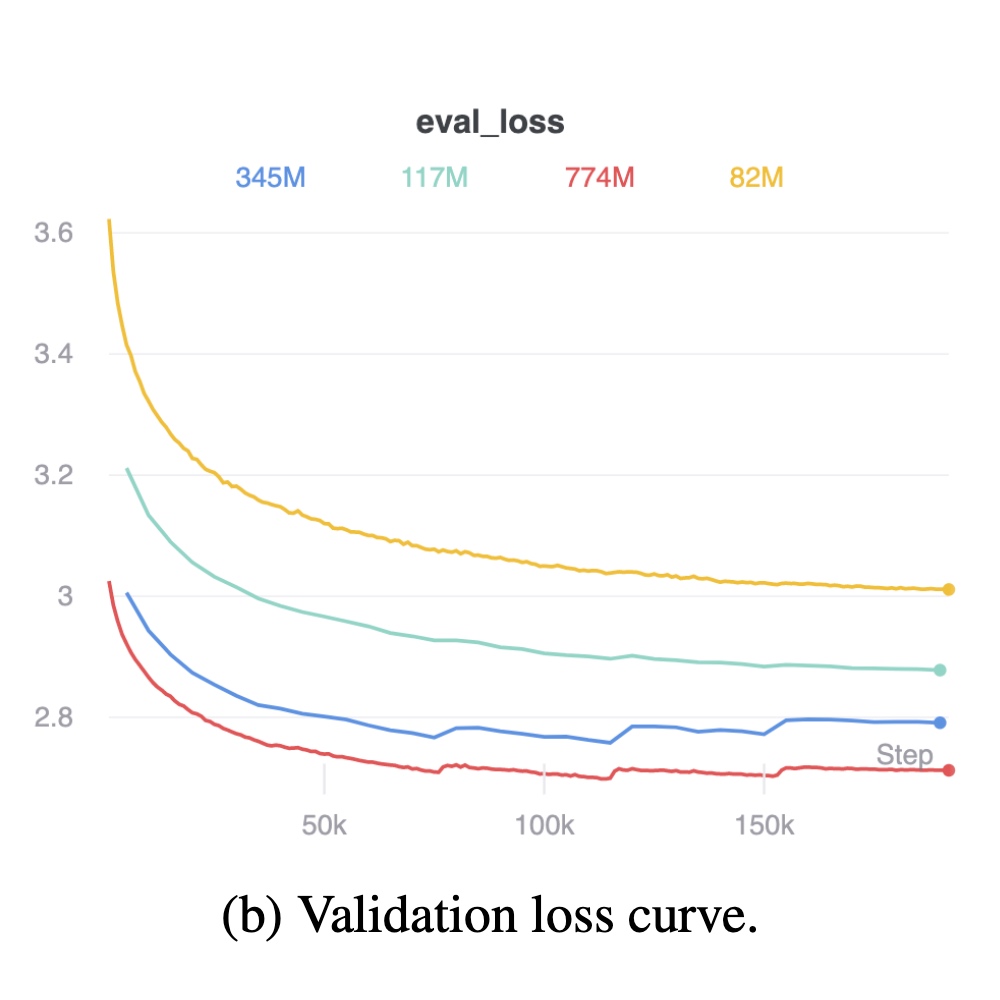

Fine-tuned a GPT-2 model on the titles and abstracts of all research papers listed under cs.AI, cs.LG, cs.CL, and cs.CV on arXiv. This project was the winner of the Generative Modeling Competition for the course CSE142 Machine Learning in Spring 2020.


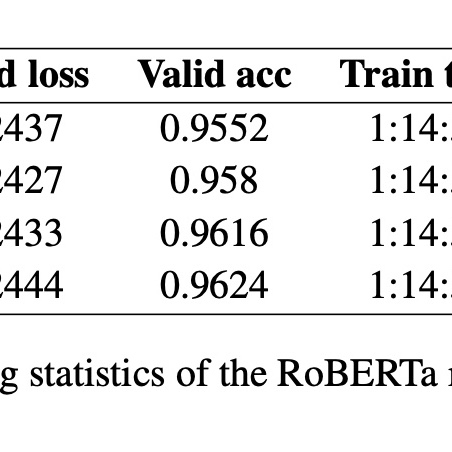

Fine-tuned a RoBERTa model on the IMDb dataset for sentiment analysis. This project was the winner of the Sentiment Analysis Competition for the course CSE142 Machine Learning in Spring 2020.


Teaching


This is a fork of Jon Barron's website.